Audio

This document describes the architecture of the audio feature. The architecture is based on the observer pattern, which allows audio connections between real and fictitious participants to be added or removed dynamically while keeping moderation scenarios simple to handle.

Real participants

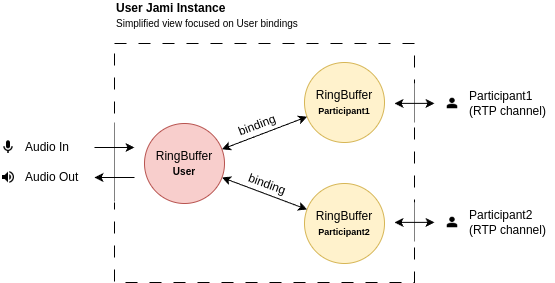

Each real participant has:

A circular buffer (RingBuffer) to store incoming audio.

A list of incoming bindings, representing the participants whose audio they perceive.

The outgoing audio for a participant, that is, the audio sent to them, is the sum of the audio streams from their incoming bindings.

For example, if a user’s incoming bindings include Participant1 and Participant2, the audio they perceive is the sum of the audio streams from those two participants.

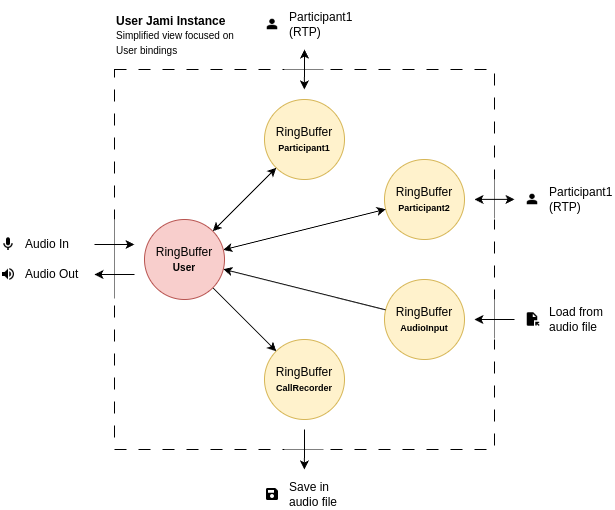
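
The buffer-plus-bindings model above can be illustrated with a minimal Python sketch. It is not the actual implementation: the class and method names (`RingBuffer.read`, `Participant.mix`), the per-reader cursors, the 16-bit clamping, and the reading of "incoming audio" as the audio arriving from that participant are all assumptions made for illustration.

```python
class RingBuffer:
    """Fixed-size circular buffer; each reader keeps its own read cursor."""

    def __init__(self, capacity: int) -> None:
        self._data = [0] * capacity
        self._capacity = capacity
        self._written = 0                    # total samples ever written
        self._cursors: dict[str, int] = {}   # reader id -> total samples read

    def write(self, samples: list[int]) -> None:
        for sample in samples:
            self._data[self._written % self._capacity] = sample
            self._written += 1

    def read(self, reader_id: str, count: int) -> list[int]:
        """Return `count` samples for this reader, padded with silence (0)."""
        pos = self._cursors.get(reader_id, 0)
        # Skip samples that were already overwritten by newer audio.
        pos = max(pos, self._written - self._capacity)
        available = min(count, self._written - pos)
        out = [self._data[(pos + i) % self._capacity] for i in range(available)]
        self._cursors[reader_id] = pos + available
        return out + [0] * (count - available)


class Participant:
    def __init__(self, name: str, capacity: int = 48000) -> None:
        self.name = name
        self.buffer = RingBuffer(capacity)        # audio arriving from this participant
        self.incoming: list["Participant"] = []   # participants this one perceives

    def mix(self, frame_size: int) -> list[int]:
        """Sum one frame from every incoming binding, clamped to 16-bit PCM."""
        mixed = [0] * frame_size
        for source in self.incoming:
            frame = source.buffer.read(self.name, frame_size)
            mixed = [a + b for a, b in zip(mixed, frame)]
        return [max(-32768, min(32767, s)) for s in mixed]
```

With `user.incoming = [participant1, participant2]`, writing `[1, 2, 3]` to the first buffer and `[10, 20, 30]` to the second makes `user.mix(3)` return `[11, 22, 33]`. Per-reader cursors are used here so that several listeners can read the same source without consuming each other's samples.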

Fictitious participants

These are specialized entities that can interact with the call without representing real people:

CallRecorder: Records the call audio to a file.

AudioInput: Plays audio from a file or shares audio from an external source (for example, during screen sharing).

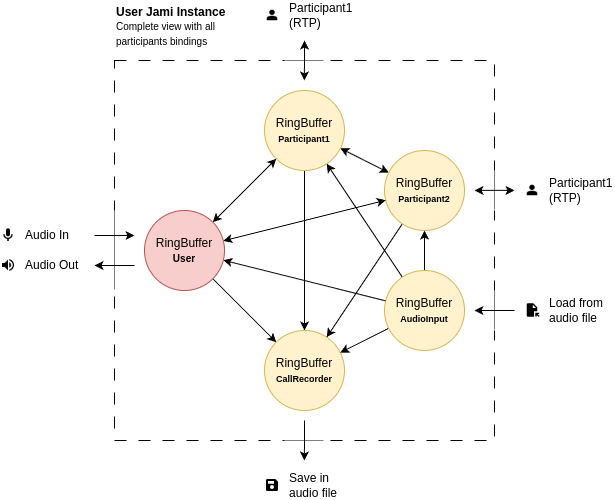
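
As a rough illustration of how such fictitious participants might look, the sketch below uses Python's standard `wave` module. The method names (`on_frame`, `next_frame`, `close`) and the mono 16-bit PCM format are assumptions for this example only; the source does not specify the file format or the interface.

```python
import struct
import wave


class CallRecorder:
    """Fictitious sink: writes the audio it receives to a WAV file."""

    def __init__(self, path: str, sample_rate: int = 48000) -> None:
        self._wav = wave.open(path, "wb")
        self._wav.setnchannels(1)    # assumption: mono
        self._wav.setsampwidth(2)    # assumption: 16-bit samples
        self._wav.setframerate(sample_rate)

    def on_frame(self, samples: list[int]) -> None:
        # Pack the samples as little-endian signed 16-bit integers.
        self._wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))

    def close(self) -> None:
        self._wav.close()


class AudioInput:
    """Fictitious source: feeds samples from a WAV file into the call."""

    def __init__(self, path: str) -> None:
        with wave.open(path, "rb") as wav:
            raw = wav.readframes(wav.getnframes())
        # Assumes the file is mono 16-bit PCM, as written by CallRecorder.
        self._samples = list(struct.unpack(f"<{len(raw) // 2}h", raw))
        self._pos = 0

    def next_frame(self, frame_size: int) -> list[int]:
        """Return the next frame, padded with silence once the file ends."""
        frame = self._samples[self._pos:self._pos + frame_size]
        self._pos += len(frame)
        return frame + [0] * (frame_size - len(frame))
```

In this sketch a `CallRecorder` only consumes audio and an `AudioInput` only produces it, so either one can be bound into a call like a real participant that happens to be silent or deaf.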

Global interconnection

All participants (real and fictitious) are interconnected, enabling audio transmission and reception based on their binding configurations.

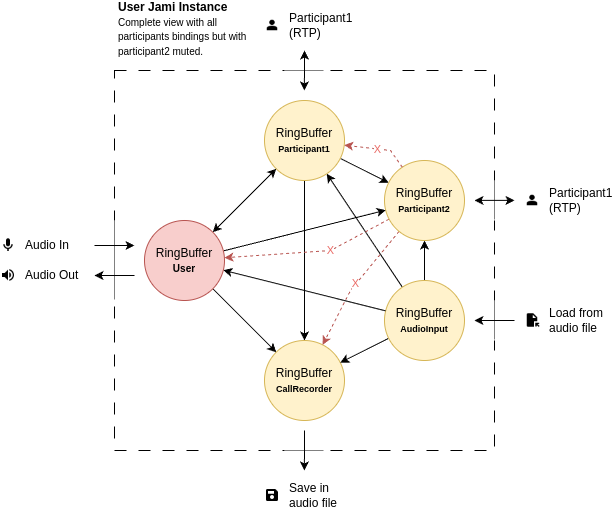
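
One way to picture the binding configuration is as a table mapping each listener to the set of sources it perceives. The sketch below is hypothetical: `BindingTable` and its methods are illustrative names, and which fictitious participants are bound to whom is an assumption, not something the source specifies.

```python
from collections import defaultdict


class BindingTable:
    """Tracks, for each listener, the set of sources it perceives."""

    def __init__(self) -> None:
        self._incoming: defaultdict[str, set[str]] = defaultdict(set)

    def bind(self, listener: str, source: str) -> None:
        if listener != source:   # a participant never hears itself
            self._incoming[listener].add(source)

    def unbind(self, listener: str, source: str) -> None:
        self._incoming[listener].discard(source)

    def sources_of(self, listener: str) -> set[str]:
        return set(self._incoming.get(listener, set()))

    def listeners_of(self, source: str) -> set[str]:
        return {l for l, srcs in self._incoming.items() if source in srcs}


table = BindingTable()
people = ["alice", "bob", "carol"]
for listener in people:
    for source in people:
        table.bind(listener, source)          # real participants hear each other
    table.bind(listener, "audio_input")       # assumption: everyone hears the shared audio
    table.bind("call_recorder", listener)     # assumption: the recorder hears everyone
```

After this setup, `table.sources_of("alice")` is `{"bob", "carol", "audio_input"}` and `table.listeners_of("alice")` is `{"bob", "carol", "call_recorder"}`. Because bindings are directional, a sink such as the recorder can listen without being heard.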

Moderation: Muting a participant

When a moderation action is issued to mute a participant:

That participant is unbound from all other participants (see red bindings).

The muted participant’s audio is no longer transmitted to, or received by, any other participant.
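
The mute action can be sketched as removing the muted participant from every other participant's incoming bindings. This is a minimal illustration under stated assumptions: the `mute` function and the dictionary layout are hypothetical, and the sketch leaves the muted participant's own incoming bindings untouched (so they can still hear the call), which the source does not state explicitly.

```python
def mute(incoming: dict[str, set[str]], muted: str) -> None:
    """Remove `muted` as an audio source for every listener.

    `incoming` maps each listener to the set of sources it perceives.
    Assumption: the muted participant's own incoming set is not changed.
    """
    for listener, sources in incoming.items():
        if listener != muted:
            sources.discard(muted)


incoming = {
    "alice": {"bob", "carol"},
    "bob": {"alice", "carol"},
    "carol": {"alice", "bob"},
    "call_recorder": {"alice", "bob", "carol"},
}
mute(incoming, "bob")
```

After the call, no listener (including the hypothetical `call_recorder`) has `"bob"` among its sources, so mixing by summation simply stops including that stream.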